Before proceeding, I want to note that I will cover multi-threading with a user interface in a separate blog post. It warrants its own post, so here we will focus on pure threading concepts.

At some point in your career you will need to write a multi-threaded application, but you probably won’t do it right. Even worse, you won’t know it, and arguably you can get away with it most of the time. Some developers go their entire careers without touching multi-threading, which in my experience is more common in web development than in client applications, services, or systems development. I believe it is something every programmer should understand, just like I believe every programmer should understand pointers.

My definition of a thread is a unit of work that can be scheduled for execution. A process in Windows contains one or more threads, and the operating system schedules those threads to run on the CPU. On a processor with only one core, true concurrency, where two units of work execute at the same instant, doesn’t exist. It appears to, otherwise your system would seem unusable, but the operating system switches between threads fast enough to give each one a fair slice of CPU time, which results in a responsive system. Processors with faster clock speeds generally switch contexts faster and can ultimately process more threads in less time. True concurrency exists on systems whose processors have more than one core, where each core can run a thread independently of the other cores. Either way, the operating system’s scheduler decides which threads get CPU time, when, and for how long, taking each thread’s priority into account.

Now that you understand a bit about what a thread is, why use them? You’re using them whether you know it or not: every application already has at least one thread before you write a single line of code in your IDE, such as the main thread that runs a console application. Threads are usually used for long-running tasks so as not to block other operations. This is very common in a UI, such as Internet Explorer, where you want to download a file on a separate thread so you can keep browsing the web. If the download ran on the same thread, you would be blocked from doing anything until it finished, either by completing or by being cancelled. In fact, you couldn’t even cancel it, because the UI would be blocked as well. Other reasons include performance, by splitting large amounts of work into pieces when processing power is available, and using more than one core at a time (parallel programming).
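To make the last point concrete, here is a minimal sketch of splitting a CPU-bound job across cores with plain Thread objects. The ParallelSumDemo class and its Sum method are hypothetical names for illustration, and using one thread per Environment.ProcessorCount is an assumption, not a rule. Each thread sums its own slice into its own slot of an array, so no two threads ever write to the same memory, and Join waits for each thread before the partial results are combined.

```csharp
using System;
using System.Threading;

// Hypothetical helper: splits a CPU-bound sum across one thread per logical processor.
public static class ParallelSumDemo
{
    public static long Sum(int[] data)
    {
        int workers = Environment.ProcessorCount;
        long[] partials = new long[workers];   // each thread writes only its own slot
        Thread[] threads = new Thread[workers];
        int chunk = (data.Length + workers - 1) / workers;

        for (int w = 0; w < workers; w++)
        {
            int index = w;                      // copy the loop variable so each lambda gets its own
            int start = index * chunk;
            int end = Math.Min(start + chunk, data.Length);

            threads[index] = new Thread(() =>
            {
                long local = 0;
                for (int i = start; i < end; i++) local += data[i];
                partials[index] = local;
            });
            threads[index].Start();
        }

        long total = 0;
        for (int w = 0; w < workers; w++)
        {
            threads[w].Join();                  // block until this worker has finished
            total += partials[w];
        }
        return total;
    }
}
```

Calling ParallelSumDemo.Sum on an array holding 0 through 999 returns 499500, the same as a single-threaded loop. For a sum this small, creating the threads costs more than it saves; the split only pays off for genuinely large amounts of work.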

Let’s take a look at how you would create a thread in C#.

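```csharp
using System.Threading;

class Program
{
    static void Main()
    {
        // Create a thread that will run DoWork, then start it.
        Thread thread = new Thread(DoWork);
        thread.Start();
    }

    static void DoWork()
    {
        // The unit of work performed by the new thread goes here.
    }
}
```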

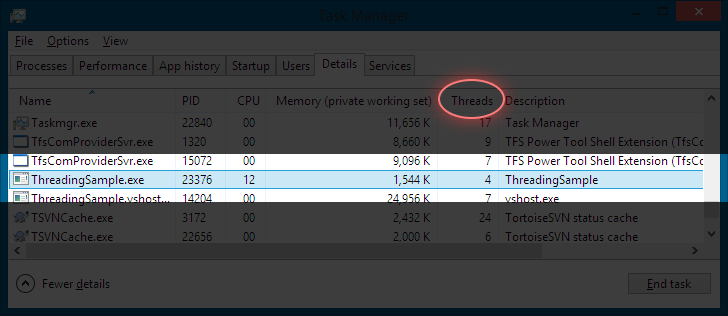

This is simple, requiring us to only create the thread and invoke Start() to begin the unit of work that will be performed by DoWork(). You can observe the number of threads in an application via Windows Task Manager. You will need to enable the threads column though; it isn’t enabled by default.

You will need a simple application to observe this. The thread count may also change on its own, because the operating system and the runtime already create threads for the bare-bones work they need to do. At the end of the day, though, you should be able to observe the thread count increase using the sample below. Also note that even though we are kicking off a separate thread, the console application doesn’t exit after you press a key the second time and Main returns; we will talk about that later in the article.

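```csharp
using System;
using System.Threading;

class Program
{
    static void Main()
    {
        // Check the thread count in Task Manager, then press a key.
        Console.ReadKey();

        Thread thread = new Thread(DoWork);
        thread.Start();

        // Check the thread count again while DoWork is running.
        Console.ReadKey();
    }

    static void DoWork()
    {
        // Spin forever so the thread stays alive.
        while (true) { }
    }
}
```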
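If you would rather not open Task Manager, a process can read its own operating-system thread count. The sketch below is one way to do that, assuming a hypothetical ThreadCountDemo class; it uses Process.Threads, the same count the Threads column shows, and starts a worker that blocks on a ManualResetEventSlim so the worker is guaranteed to be alive while the second count is taken. As noted above, the operating system and runtime can add or remove their own threads at any moment, so treat the two numbers as a rough comparison rather than an exact difference of one.

```csharp
using System.Diagnostics;
using System.Threading;

// Hypothetical helper: reads the current process's OS thread count before and after starting a thread.
public static class ThreadCountDemo
{
    public static int CurrentThreadCount()
    {
        using (Process process = Process.GetCurrentProcess())
        {
            return process.Threads.Count;
        }
    }

    public static void CountAroundNewThread(out int before, out int after)
    {
        before = CurrentThreadCount();

        using (var release = new ManualResetEventSlim(false))
        {
            // The worker blocks until released, so it is alive while we count.
            var worker = new Thread(() => release.Wait());
            worker.Start();

            after = CurrentThreadCount();

            release.Set();   // let the worker finish
            worker.Join();   // wait for it before disposing the event
        }
    }
}
```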

I’ll give one more example, but by this point the concepts should resonate. Let’s look at a simple program that contains two threads processing work.

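```csharp
using System.Diagnostics;
using System.Threading;

class Program
{
    // Shared by both threads without any synchronization.
    static int value;

    static void Main(string[] args)
    {
        Thread thread1 = new Thread(DoWork1),
               thread2 = new Thread(DoWork2);

        thread1.Start();
        thread2.Start();
    }

    static void DoWork1()
    {
        // Debug.Print writes to the debugger's Output window in Debug builds.
        while (true) Debug.Print((value++).ToString());
    }

    static void DoWork2()
    {
        while (true) Debug.Print((value -= 2).ToString());
    }
}
```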

When you run this multiple times and compare the output of each, you should immediately notice that the output varies. Here are two runs that I performed.

Run one: -1, 0, -3, -2, -3, -2, -4, -1, -6, -5, -4, -3

Run two: -1, 0, -3, -3, -2, -3, -3, -4, -3, -4, -3, -5

Once you understand how multi-threading works, this makes sense. Each thread gets a slice of CPU time to execute, but there is no guarantee what that exact slice will be or when it will happen; all you know is that each thread will eventually be scheduled. The two threads also read and write value without any synchronization, so every run interleaves their updates differently. Your threads are also competing with all other threads in the operating system, and by this point you should be connecting the dots: if a user complains that your software is slow and you find out they are running another program that consumes 90% of the available CPU, you know your threads are not getting the time slices they need, when they need them.
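The unsynchronized updates to value deserve a closer look, because value++ is not a single step: it reads the field, adds one, and writes it back. The sketch below, using a hypothetical CounterDemo class, has two threads increment two counters the same number of times. The plain counter can lose updates when one thread overwrites another’s write, while Interlocked.Increment performs the same increment atomically.

```csharp
using System.Threading;

// Hypothetical demo: the same increments done with and without an atomic operation.
public static class CounterDemo
{
    private static int unsafeCount;
    private static int safeCount;

    public static void Run(int iterationsPerThread, out int unsafeResult, out int safeResult)
    {
        unsafeCount = 0;
        safeCount = 0;

        ThreadStart work = () =>
        {
            for (int i = 0; i < iterationsPerThread; i++)
            {
                unsafeCount++;                         // read-modify-write: updates can be lost
                Interlocked.Increment(ref safeCount);  // atomic: no update is ever lost
            }
        };

        var t1 = new Thread(work);
        var t2 = new Thread(work);
        t1.Start(); t2.Start();
        t1.Join(); t2.Join();

        unsafeResult = unsafeCount;
        safeResult = safeCount;
    }
}
```

With 100,000 iterations per thread, the Interlocked counter always ends at 200,000, while the plain counter can end lower; how much lower varies from run to run and machine to machine.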

Background and Foreground Threads

Look closely at the last example program. You will notice that there is no blocking call after invoking Thread.Start. If you caught that, you are probably wondering why the console application does not exit immediately, since the threads run asynchronously and Main returns right after starting them. The Thread class has a property called IsBackground, and it is important that you understand it. Let’s look at the MSDN documentation.

> A thread is either a background thread or a foreground thread. Background threads are identical to foreground threads, except that background threads do not prevent a process from terminating. Once all foreground threads belonging to a process have terminated, the common language runtime ends the process. Any remaining background threads are stopped and do not complete.

A thread created with new Thread(...) is a foreground thread by default. Even though Main has completed its work, the runtime keeps the process alive until the foreground threads have completed theirs. In this case, that never happens until you terminate the application, because both threads are in infinite while loops. You can change this behavior simply by setting IsBackground to true, which allows the program to exit without waiting for the threads to complete. If you do this, the console application exits immediately, and you would need to add a blocking call, such as Console.ReadKey, to give the threads time to run.
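Here is a small sketch of the property in action, assuming a hypothetical BackgroundDemo class. DefaultIsBackground confirms that a newly constructed Thread is a foreground thread, and StartSpinner starts a thread that never finishes on its own, with IsBackground set from its argument.

```csharp
using System.Threading;

// Hypothetical helper showing the IsBackground property.
public static class BackgroundDemo
{
    // new Thread(...) creates a foreground thread unless told otherwise.
    public static bool DefaultIsBackground()
    {
        return new Thread(() => { }).IsBackground;
    }

    // Starts a thread that loops forever.
    // true:  the runtime stops it when the last foreground thread ends.
    // false: it keeps the process alive indefinitely.
    public static Thread StartSpinner(bool isBackground)
    {
        var thread = new Thread(() =>
        {
            while (true) Thread.Sleep(100);   // sleep instead of burning a core
        });
        thread.IsBackground = isBackground;
        thread.Start();
        return thread;
    }
}
```

Calling StartSpinner(true) from Main and then returning lets the console application exit immediately, while StartSpinner(false) keeps it running forever, just like the earlier sample.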

Lock

By now you understand how to create threads, how to work with them, and how they work, but what about a lock? You have probably read about locks and even seen a code sample, but you may still have the same questions I had when I first read about them:

- What is a lock?

- When is a lock appropriate to use?

- How do I use a lock correctly?

Let’s first look at how the MSDN documentation defines a lock.

> The lock keyword marks a statement block as a critical section by obtaining the mutual-exclusion lock for a given object, executing a statement, and then releasing the lock.

Now let’s turn that into something more understandable.

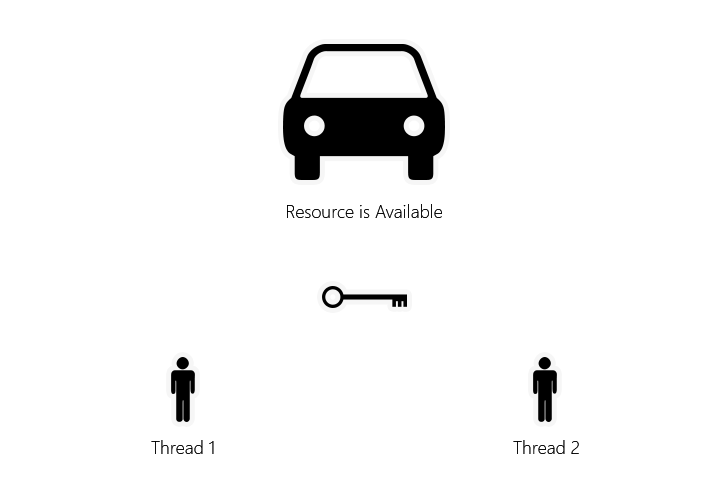

The image above is simple. You have two people, but there is only one key to their only car. If person one takes the key to go run an errand, person two has to wait until they come back and make the key available again before they can drive the car for their own errand. This has both an advantage and a disadvantage. The advantage is that having only one key ensures the two people never try to use the car at the same time. That seems like a silly scenario, but imagine both people running to the car and fighting over who gets to use it, with the argument lasting several hours. Several hours were lost and nothing was accomplished, all because two parties tried to use the same resource at once. This is the advantage of a lock: it prevents this sort of scenario by acting as a mediator and forcing each thread to wait its turn to use the resource.

The disadvantage is that the thread holding the mutually-exclusive lock may never finish, so the lock could be held forever. This is commonly called a deadlock as well, and it is the worst sort of lock problem, because the other thread will wait until the lock is released, which might be never. Strictly speaking, a classic deadlock is two or more threads each waiting for a lock the other holds, but the symptom is the same: threads that wait forever and an application that hangs, leaving you scratching your head.

Race Condition

Before I can show you how to use a lock or demonstrate a deadlock, I need to demonstrate a race condition, which is the root problem a lock solves in the first place.

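```csharp
using System;
using System.Collections.Generic;
using System.Threading;

class Program
{
    // List<T> is not thread-safe, yet both threads add to it.
    private static List<Guid> guids = new List<Guid>();

    static void Main(string[] args)
    {
        Thread thread1 = new Thread(DoWork1),
               thread2 = new Thread(DoWork2);

        thread1.Start();
        thread2.Start();

        // Continuously display how many items have been added.
        while (true)
        {
            Console.Clear();
            Console.Write(guids.Count);
        }
    }

    static void DoWork1()
    {
        while (true) guids.Add(Guid.NewGuid());
    }

    static void DoWork2()
    {
        while (true) guids.Add(Guid.NewGuid());
    }
}
```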

This code will most likely throw an exception, such as an ArgumentOutOfRangeException (the exact exception can vary between runs and .NET versions). I have to say “most likely” because there is a small chance the sample runs without the race condition ever surfacing. That outcome is extremely rare, but from an educational standpoint I have to be truthful that it is possible.

Moving on, this results in an exception because List<T> is not thread-safe. Thread safety describes a mechanism, component, or piece of code that was designed with multi-threading in mind and is guaranteed to work correctly when accessed from more than one thread concurrently. Authors of thread-safe code are responsible for ensuring it, and exactly what “thread-safe” promises varies from one component to the next, which is why you have to rely on documentation, if there is any. Microsoft is good about documenting thread safety throughout .NET, but this may not be the case for third-party code.

The threads in the sample above are adding to the list as fast as they possibly can. When you add an item to a List<T>, it automatically grows its internal array if necessary. Because nothing coordinates the two threads, one thread can update the list’s count while the other is in the middle of growing the array, which can leave the list with a new capacity less than its current size. The exception message in this case is extremely articulate about the problem.
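As an aside on documented thread safety, .NET also ships collections in System.Collections.Concurrent that are documented as safe for concurrent use. This is a swapped-in alternative, not the approach this article takes next. The sketch below assumes a hypothetical ConcurrentQueueDemo class and replaces the infinite loops with a fixed number of items per thread so the result can be checked.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical demo: ConcurrentQueue<T> instead of List<T>, no explicit lock needed.
public static class ConcurrentQueueDemo
{
    public static int AddFromTwoThreads(int itemsPerThread)
    {
        var guids = new ConcurrentQueue<Guid>();

        ThreadStart work = () =>
        {
            for (int i = 0; i < itemsPerThread; i++)
                guids.Enqueue(Guid.NewGuid());   // safe to call from many threads at once
        };

        var t1 = new Thread(work);
        var t2 = new Thread(work);
        t1.Start(); t2.Start();
        t1.Join(); t2.Join();

        return guids.Count;
    }
}
```

AddFromTwoThreads(10000) returns 20000 every time. A concurrent collection only protects its own operations, though; if you need several steps to happen as one unit, you still need a lock.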

We’re going to solve this problem with a lock.

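```csharp
using System;
using System.Collections.Generic;
using System.Threading;

class Program
{
    private static List<Guid> guids = new List<Guid>();

    // Private, readonly object used only as the lock: this is the "key" to the car.
    private static readonly object key = new object();

    static void Main(string[] args)
    {
        Thread thread1 = new Thread(DoWork1),
               thread2 = new Thread(DoWork2);

        thread1.Start();
        thread2.Start();

        while (true)
        {
            Console.Clear();
            Console.Write(guids.Count);
        }
    }

    static void DoWork1()
    {
        while (true)
        {
            // Only one thread at a time can hold key and run Add.
            lock (key)
            {
                guids.Add(Guid.NewGuid());
            }
        }
    }

    static void DoWork2()
    {
        while (true)
        {
            lock (key)
            {
                guids.Add(Guid.NewGuid());
            }
        }
    }
}
```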

Make sure you read the MSDN documentation on lock; I’m not going to repeat it here, but it is very important that you lock on an object that is private and not accessible to anything beyond your control. The lock code itself is simple, and you should be able to correlate it with the picture of the people, key, and car. What you need to take from this is that key is just an object in memory that acts as the mediator, forcing the threads to wait their turn before they can run their critical section of code. Re-run the sample and you can verify that the race condition is resolved.
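It can help to see what lock does under the covers. For a lock on an ordinary object, the C# compiler (C# 4 and later) expands lock (key) { ... } into roughly the Monitor.Enter/Monitor.Exit pattern below, with the release in a finally block so the lock is freed even if the critical section throws. MonitorDemo, AddWithMonitor, and RunTwoThreads are hypothetical names for this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical demo: the lock statement written out by hand with Monitor.
public static class MonitorDemo
{
    private static readonly List<Guid> guids = new List<Guid>();
    private static readonly object key = new object();

    public static void AddWithMonitor()
    {
        bool lockTaken = false;
        try
        {
            Monitor.Enter(key, ref lockTaken);  // blocks until this thread owns key
            guids.Add(Guid.NewGuid());          // critical section
        }
        finally
        {
            if (lockTaken) Monitor.Exit(key);   // always released, even if Add throws
        }
    }

    public static int RunTwoThreads(int itemsPerThread)
    {
        lock (key) guids.Clear();

        ThreadStart work = () =>
        {
            for (int i = 0; i < itemsPerThread; i++) AddWithMonitor();
        };

        var t1 = new Thread(work);
        var t2 = new Thread(work);
        t1.Start(); t2.Start();
        t1.Join(); t2.Join();

        lock (key) return guids.Count;
    }
}
```

RunTwoThreads(10000) returns 20000, because every Add runs while exactly one thread owns key. In everyday code, prefer the lock statement; writing Monitor calls by hand mainly makes sense when you need extras such as Monitor.TryEnter with a timeout.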

Deadlocks

Locks are simple, and as promised, we can now demonstrate a deadlock.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

class Program
{
    private static List<Guid> guids = new List<Guid>();
    private static readonly object key = new object();

    static void Main(string[] args)
    {
        Thread thread1 = new Thread(DoWork1),
               thread2 = new Thread(DoWork2);

        thread1.Start();
        thread2.Start();

        while (true)
        {
            Console.Clear();
            Console.Write(guids.Count);
        }
    }

    static void DoWork1()
    {
        while (true)
        {
            lock (key)
            {
                // Infinite loop inside the lock: key is never released.
                while (true)
                {
                    guids.Add(Guid.NewGuid());
                }
            }
        }
    }

    static void DoWork2()
    {
        while (true)
        {
            // Waits forever once thread1 owns key.
            lock (key)
            {
                guids.Add(Guid.NewGuid());
            }
        }
    }
}
```

thread2 might get a chance to run at first, but once thread1 acquires the lock, thread2 will wait forever. This is because we added an infinite loop inside the lock in thread1, so the lock is never released. This is a very simple demonstration, but the important thing to note is that a deadlock can involve a single thread that holds a lock forever or many threads waiting on one another; it depends on your code.
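The “many threads waiting on one another” case is worth seeing too, because it is easy to write by accident: two threads take the same two locks in opposite order. The sketch below uses hypothetical names (LockOrderDemo, lockA, lockB) and, instead of a plain lock on the second object, uses Monitor.TryEnter with a timeout so the demonstration reports the problem rather than hanging. A Barrier makes sure both threads hold their first lock before either reaches for its second.

```csharp
using System.Threading;

// Hypothetical demo: two threads taking the same two locks.
public static class LockOrderDemo
{
    private static readonly object lockA = new object();
    private static readonly object lockB = new object();

    // Opposite order: one thread takes A then B, the other B then A.
    // Returns true if at least one thread gave up waiting for its second lock.
    public static bool OppositeOrderTimesOut(int timeoutMs)
    {
        using (var barrier = new Barrier(2))
        {
            bool oneGotBoth = false, twoGotBoth = false;
            var one = new Thread(() => oneGotBoth = TakeBoth(lockA, lockB, barrier, timeoutMs));
            var two = new Thread(() => twoGotBoth = TakeBoth(lockB, lockA, barrier, timeoutMs));
            one.Start(); two.Start();
            one.Join(); two.Join();
            return !(oneGotBoth && twoGotBoth);
        }
    }

    // Same order: both threads take A then B, so neither can hold what the other needs.
    public static bool SameOrderSucceeds(int timeoutMs)
    {
        bool oneGotBoth = false, twoGotBoth = false;
        var one = new Thread(() => oneGotBoth = TakeBoth(lockA, lockB, null, timeoutMs));
        var two = new Thread(() => twoGotBoth = TakeBoth(lockA, lockB, null, timeoutMs));
        one.Start(); two.Start();
        one.Join(); two.Join();
        return oneGotBoth && twoGotBoth;
    }

    private static bool TakeBoth(object first, object second, Barrier barrier, int timeoutMs)
    {
        lock (first)
        {
            // Opposite-order demo only: wait until both threads hold their first lock.
            if (barrier != null) barrier.SignalAndWait();

            // TryEnter gives up after timeoutMs instead of waiting forever like lock does.
            if (!Monitor.TryEnter(second, timeoutMs)) return false;
            try { return true; }
            finally { Monitor.Exit(second); }
        }
    }
}
```

OppositeOrderTimesOut returns true because at least one thread must give up: each is waiting for a lock the other will not release until its own wait ends. With a plain lock instead of TryEnter, both threads would wait forever. SameOrderSucceeds returns true because agreeing on a single order for acquiring locks makes that circular wait impossible, which is a common way to avoid this kind of deadlock.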

Worthy mention: Task Parallel Library

I have written other articles on the TPL, but what you need to know is that it is the modern way to start tasks, which by default run on the managed thread pool (which warrants its own article). Everything I have shown you uses System.Threading.Thread, which has been around forever and a day. Let me say this now: there is nothing wrong with using Thread over Task, but the API surface the TPL provides has made writing multi-threaded code much easier.
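For comparison, here is a minimal sketch of the locked race-condition sample rewritten with the TPL, assuming a hypothetical TaskDemo class and a fixed number of items instead of infinite loops. Task.Run queues each delegate to the thread pool rather than creating a dedicated Thread, and Task.WaitAll plays the role of Join, but the shared List<T> still needs the same lock.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical demo: the locked sample using Task.Run instead of new Thread.
public static class TaskDemo
{
    private static readonly List<Guid> guids = new List<Guid>();
    private static readonly object key = new object();

    public static int AddFromTwoTasks(int itemsPerTask)
    {
        lock (key) guids.Clear();

        Action work = () =>
        {
            for (int i = 0; i < itemsPerTask; i++)
            {
                lock (key) guids.Add(Guid.NewGuid());   // the lock is still required
            }
        };

        // Run both delegates on the thread pool and wait for both to finish.
        Task.WaitAll(Task.Run(work), Task.Run(work));

        lock (key) return guids.Count;
    }
}
```

AddFromTwoTasks(10000) returns 20000. Note that thread-pool threads are background threads, so unlike the earlier Thread samples, an unfinished task will not keep a console application alive after Main returns.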

I’m not going to write much more about TPL in this article, but I want to end the article leaving you with the understanding of how multi-threading works, because as you begin to use TPL, all of these concepts still apply and it’s just another layer of abstraction.

Conclusion

There is still a lot I did not cover about multi-threading. How do I know when a thread completes? How do I share thread state? What is a thread pool? What is thread synchronization? I like to tell colleagues that everything in software development is its own college degree. You can spend years learning about multi-threading, all the way down to the operating system’s inner workings. I recommend you do, but I hope this article at least covered some areas of multi-threading that I see the same questions about over and over, and that, in my experience, many developers know nothing about, struggle with at first, or never fully understand before moving on.

Happy coding!